Abstract

Classifier ensembles are increasingly applied to technical diagnostic problems. When dealing with vibration signals, many point features can be extracted. This raises the problem of how to choose the best classifiers for the ensemble. One solution is to use measures that quantify the diversity amongst the classifier outputs. Since there is no general definition of diversity and no general method for calculating it, selecting the correct measure is a vital task. This paper presents research on the application of classifier ensembles built with Bagging to the detection of rotating machinery faults. It was found that there is a relationship between classification accuracy and the diversity measures.

1. Introduction

Fault detection and isolation remains an important topic, and various methods can be used to address it. The relationship between measured symptoms and faults can be determined using pattern recognition methods, so that diagnosis is performed through classification. This is a well-known approach, but it faces serious problems in many real-life applications. One of the most crucial is that only a small number of data samples is available for training the classifier. One possible way to overcome this drawback is to apply classifier ensembles. In simple approaches such as Bagging or Boosting, a large number of individual classifiers is generated to build the ensemble. Many redundant classifiers in the initial classifier pool can be removed from the ensemble without decreasing its ability to make highly accurate predictions [1, 2]. Therefore, a method is required that finds the best subset of an ensemble. One theoretically possible solution is selection based on the diversity between the outputs of the individual classifiers. Unfortunately, there is no strict definition of diversity; therefore, there is no universal technique to quantify this property of a classifier ensemble.

This paper presents research on the application of common diversity measures to prune classifier ensembles for the vibration diagnostics of rotating machinery.

1.1. Classifier ensembles

Bagging, or bootstrap aggregation, is a technique that can be used with many classification methods to reduce the variance associated with prediction and thereby improve the prediction process. The key idea is to generate many bootstrap samples, i.e. samples of the same size drawn with replacement, from the available training data. A classification method is then applied to each bootstrap sample. Finally, the outputs of the individual classifiers are combined by simple voting to obtain the overall prediction, with the variance being reduced by averaging. To take advantage of this method, the base classifier must be unstable, i.e. minor changes in the training set can lead to major changes in the classifier output.
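A minimal sketch of the procedure described above, written in Python with NumPy. It assumes integer class labels (0, 1, ...), a k-nearest-neighbours base classifier with Euclidean distance and k = 3 (the value of k is a hypothetical choice, not taken from the study), and ties in the vote resolved towards the smallest label. The function names `knn_predict` and `bagging_predict` are illustrative only.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """k-nearest-neighbours prediction with Euclidean distance and plurality vote."""
    # Squared Euclidean distances between every test and training sample: (n_test, n_train)
    d = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]  # indices of the k nearest training samples
    neighbour_labels = y_train[nearest]     # shape (n_test, k)
    # Most frequent label per test sample; ties go to the smallest label
    return np.array([np.bincount(row).argmax() for row in neighbour_labels])


def bagging_predict(X_train, y_train, X_test, n_estimators=20, k=3, seed=0):
    """Bagging: one KNN member per bootstrap sample, combined by simple voting."""
    rng = np.random.default_rng(seed)
    n = len(X_train)
    member_preds = []
    for _ in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # bootstrap sample: n draws with replacement
        member_preds.append(knn_predict(X_train[idx], y_train[idx], X_test, k))
    member_preds = np.array(member_preds)  # shape (n_estimators, n_test)
    # Simple voting across members for every test sample
    ensemble = np.array([np.bincount(col).argmax() for col in member_preds.T])
    return ensemble, member_preds
```

Returning the individual member predictions alongside the ensemble decision makes it possible to evaluate sub-ensembles and their diversity later without retraining the members.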

1.2. Diversity measures

Classifier ensembles are useful and outperform a single classifier when the individual classifiers are accurate. At the same time, there should be some diversity amongst them, because if the classifiers agree fully, there is no benefit in using an ensemble instead of a single classifier. Although diversity is intuitively well understood, there is no general and universal measure that would be suitable for classification tasks of differing complexity. Many measures have been proposed to quantify the diversity amongst classifier outputs [3-5]. Three of them were selected for validation in the task of rotating machinery fault detection and isolation, based on the classification of the features describing the vibration signals.

• Double Fault agreement (DF; a lower value means higher diversity) [3],

• Kohavi-Wolpert variance (KW; a greater value means higher diversity) [4],

• Entropy measure (Ent; a greater value means higher diversity) [5].
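For reference, the three measures can be written in terms of oracle outputs, following formulations that are common in the ensemble-diversity literature; the exact normalizations shown here are an assumption and may differ in detail from those used in this study. For L ≥ 2 classifiers D_1, ..., D_L evaluated on N samples z_1, ..., z_N, let y_{j,i} be the oracle output of D_i on z_j, l(z_j) the number of classifiers that label z_j correctly, and N^{00}_{i,k} the number of samples misclassified by both D_i and D_k:

$$
y_{j,i} = \begin{cases} 1, & \text{if } D_i \text{ classifies } z_j \text{ correctly} \\ 0, & \text{otherwise} \end{cases}, \qquad l(z_j) = \sum_{i=1}^{L} y_{j,i}
$$

$$
\mathrm{DF} = \frac{2}{L(L-1)} \sum_{i=1}^{L-1} \sum_{k=i+1}^{L} \frac{N^{00}_{i,k}}{N}
$$

$$
\mathrm{KW} = \frac{1}{N L^{2}} \sum_{j=1}^{N} l(z_j)\,\bigl(L - l(z_j)\bigr)
$$

$$
\mathrm{Ent} = \frac{1}{N} \sum_{j=1}^{N} \frac{1}{L - \lceil L/2 \rceil} \min\bigl\{ l(z_j),\, L - l(z_j) \bigr\}
$$

DF is a pairwise measure averaged over all pairs of members, whereas KW and Ent are computed directly from the number of correct votes per sample.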

The chosen diversity measures are defined for oracle classifier outputs, i.e. outputs coded as 1 when a classifier labels a sample correctly and 0 otherwise.
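The three measures listed above can be computed from a matrix of oracle outputs. The following Python sketch assumes the common textbook formulations (pairwise double fault averaged over all pairs, Kohavi-Wolpert variance, and the entropy measure normalized by L − ⌈L/2⌉); the exact normalization used in the study is not stated, and all function names are hypothetical.

```python
import itertools
import math

import numpy as np


def oracle_outputs(member_preds, y_true):
    """Oracle matrix of shape (L, N): 1 where member i labels sample j correctly, else 0."""
    return (np.asarray(member_preds) == np.asarray(y_true)[None, :]).astype(int)


def _check(oracle):
    oracle = np.asarray(oracle)
    if oracle.ndim != 2 or oracle.shape[0] < 2:
        raise ValueError("oracle must have shape (L, N) with L >= 2 classifiers")
    return oracle


def double_fault(oracle):
    """Average over all member pairs of the fraction of samples both members get wrong."""
    oracle = _check(oracle)
    pairs = itertools.combinations(range(oracle.shape[0]), 2)
    return float(np.mean([np.mean((oracle[i] == 0) & (oracle[k] == 0)) for i, k in pairs]))


def kohavi_wolpert(oracle):
    """Kohavi-Wolpert variance computed from the number of correct votes per sample."""
    oracle = _check(oracle)
    L, N = oracle.shape
    correct = oracle.sum(axis=0)  # l(z_j): members that classify sample j correctly
    return float(np.sum(correct * (L - correct)) / (N * L ** 2))


def entropy_measure(oracle):
    """Entropy measure: 0 when all members agree on every sample, 1 at maximal disagreement."""
    oracle = _check(oracle)
    L = oracle.shape[0]
    correct = oracle.sum(axis=0)
    return float(np.mean(np.minimum(correct, L - correct)) / (L - math.ceil(L / 2)))
```

For example, two members with oracle rows `[1, 1, 0, 0]` and `[1, 0, 1, 0]` fail together only on the last sample, so DF = 0.25, while KW = 0.125 and Ent = 0.5.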

2. Case study

To reveal the correspondence between diversity measures and classification performance for ensembles built with Bagging, an active diagnostic experiment was conducted.

2.1. Test setup

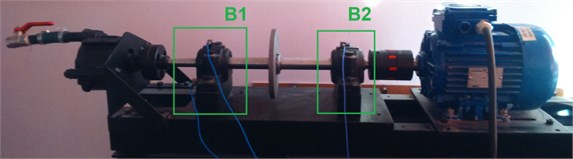

A set of vibration signals recorded during an active diagnostic experiment, performed on a laboratory stand containing a model of rotating machinery (Fig. 1), was used. The following machinery conditions were simulated: S1 – no fault, S2 – small unbalance (6.21 g·m), S3 – large unbalance (12.43 g·m), S4 – misalignment (0.5 mm), S5 – pump with 10 % throttling. For each condition, 20 realizations of the signal were acquired.

The accelerometers used to record the vibration signals were mounted on the bearing housings. A triaxial piezoelectric accelerometer (PCB Piezotronics T356A32) was mounted on the B1 housing to measure vibrations in the axial and radial directions. Two accelerometers (PCB Piezotronics T338B30) were installed on the B2 housing: the first measured in the radial (Z) direction and the second in the axial (Y) direction. The signals were recorded with an LMS SCADAS Mobile system and processed in LMS Test.Lab v12 software. The acquired signals were analyzed in the MATLAB environment, and each signal was described by the following point estimators: mean, RMS, crest factor, and kurtosis.
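The four point estimators can be sketched in Python as follows. The definitions are the usual ones (RMS as the square root of the mean square, crest factor as peak absolute value over RMS, kurtosis as the normalized fourth central moment, equal to 3 for a Gaussian signal); whether the MATLAB analysis used exactly these conventions, for example biased moments and non-excess kurtosis, is an assumption. How the values from the individual channels were combined into one feature vector is not stated; concatenating them per realization would be one option. The name `point_features` is hypothetical.

```python
import numpy as np


def point_features(x):
    """Mean, RMS, crest factor and kurtosis of one vibration signal (1-D array)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    rms = np.sqrt(np.mean(x ** 2))          # root mean square
    crest = np.max(np.abs(x)) / rms         # peak-to-RMS ratio
    centred = x - mean
    # Non-excess kurtosis from biased (population) moments: about 3 for Gaussian noise
    kurt = np.mean(centred ** 4) / np.mean(centred ** 2) ** 2
    return {"mean": mean, "rms": rms, "crest_factor": crest, "kurtosis": kurt}
```

A square-wave-like signal such as `[1, -1, 1, -1]` gives mean 0, RMS 1, crest factor 1 and kurtosis 1, whereas impulsive faults tend to raise both the crest factor and the kurtosis.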

Fig. 1. Test stand

The classification process was performed as follows. First, a pool of 20 individual classifiers was created using Bagging. Then, to complete the Bagging procedure, majority voting was performed to obtain the final decision of the classifier ensemble. From the classifier pool, 1, 2, 5, 15, or 20 classifiers were selected for voting. Exhaustive validation of the classifier ensembles was performed, with 20, 190, 15504, 15504, and 1 combinations of individual classifiers, respectively, depending on the ensemble size. The k-nearest neighbors (KNN) classifier was selected as the base classifier. For each ensemble, the diversity between the member classifiers was calculated. The research was performed for fault detection, where class C1 = {S1} and C2 = {S2, S3, S4, S5}. The mean classification error was chosen as the parameter describing classification accuracy.
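The exhaustive evaluation of sub-ensembles described above can be sketched as follows: every combination of the requested size is drawn from the pool of member predictions, combined by majority voting, and scored by its classification error. The binary relabelling implements the detection task (C1 versus C2). The helper names are hypothetical, and tie-breaking towards label 0 (C1, no fault) is an assumption, since the text does not say how ties were resolved; ties can occur for ensembles with an even number of members, such as 2.

```python
import itertools
import math

import numpy as np


def detection_label(state):
    """Fault-detection task: C1 = {S1} -> 0, C2 = {S2, S3, S4, S5} -> 1."""
    return 0 if state == "S1" else 1


def majority_vote(preds):
    """Plurality vote down the columns of preds (n_members, n_samples); ties go to the smallest label."""
    return np.array([np.bincount(col).argmax() for col in np.asarray(preds).T])


def exhaustive_errors(member_preds, y_true, ensemble_size):
    """Classification error of every sub-ensemble of the given size drawn from the pool."""
    member_preds = np.asarray(member_preds)
    y_true = np.asarray(y_true)
    errors = {}
    for combo in itertools.combinations(range(len(member_preds)), ensemble_size):
        vote = majority_vote(member_preds[list(combo)])
        errors[combo] = float(np.mean(vote != y_true))
    return errors


# Number of sub-ensembles for a pool of 20 bagged classifiers
counts = {m: math.comb(20, m) for m in (1, 2, 5, 15, 20)}
# -> {1: 20, 2: 190, 5: 15504, 15: 15504, 20: 1}
```

Since choosing 15 members out of 20 is equivalent to leaving 5 out, the 5- and 15-member cases have the same number of combinations, and the full 20-member ensemble occurs only once.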

2.2. Results

Classification was performed to detect machine faults. According to the testing procedure, five classifier pools were generated and validated using k-fold cross-validation with k = 10. The results are presented in Table 1. The mean classification error is at a similar level for all ensemble sizes. At the same time, the minimum error was lowest when the ensemble was formed from 2 or 5 classifiers. To investigate whether there is a correspondence between diversity and classification accuracy, the correlation between these parameters was calculated (Table 2). There is no strong correspondence between classification error and diversity, especially as the number of classifiers in the ensemble increases. Nevertheless, KW and Ent were found to be the most promising in the studied case.
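A sketch of the two evaluation steps in this paragraph: shuffled 10-fold cross-validation over the 100 recorded realizations (5 conditions × 20) and the correlation between diversity and classification error. It assumes that Pearson's coefficient was used for Table 2 and that the folds were drawn at random; neither is stated in the text, and the function names are hypothetical.

```python
import numpy as np


def kfold_splits(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, test_idx


def pearson(x, y):
    """Pearson correlation coefficient; nan if either sequence is constant."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    denom = np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2))
    return float(np.sum(xc * yc) / denom) if denom > 0 else float("nan")
```

Here `x` would hold one diversity value per sub-ensemble of a given size and `y` the corresponding mean cross-validated error. With only one 20-member ensemble there is a single pair of values, for which the coefficient is undefined.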

Table 1. Classification error for different classifier ensembles

| Classification error / number of member classifiers | 1 | 2 | 5 | 15 | 20 |
|---|---|---|---|---|---|
| Mean | 0.22 | 0.21 | 0.23 | 0.24 | 0.25 |
| Max | 0.30 | 0.27 | 0.27 | 0.25 | 0.25 |
| Min | 0.15 | 0.12 | 0.12 | 0.22 | 0.25 |

Table 2. Correlation between diversity measures and mean classification error

| Diversity measure / number of member classifiers | 1 | 2 | 5 | 15 |
|---|---|---|---|---|
| DF | – | 0.592 | 0.629 | 0.556 |
| KW | 0.996 | 0.679 | 0.323 | −0.080 |
| Ent | 0.993 | 0.668 | 0.319 | −0.091 |

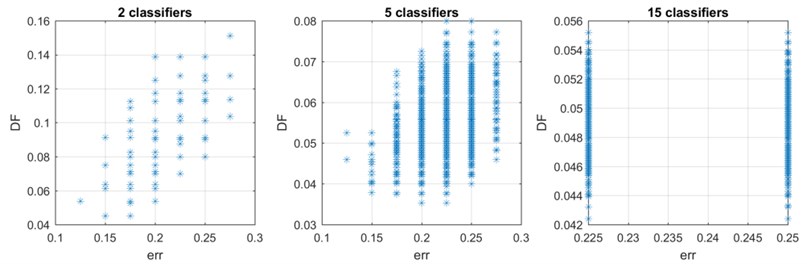

Fig. 2. Correspondence between DF measure and classification error for ensembles with 2, 5 and 15 classifiers

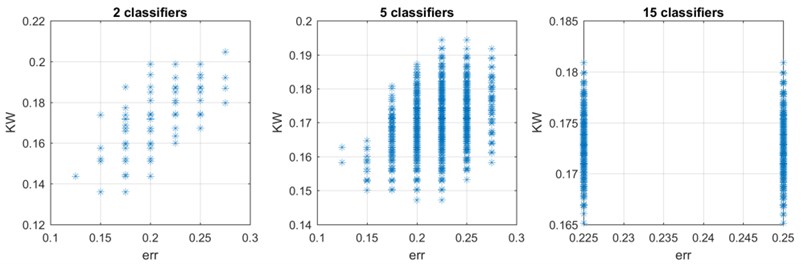

Fig. 3. Correspondence between KW measure and classification error for ensembles with 2, 5 and 15 classifiers

To check whether there is a relationship between the selected diversity measures and the classification error, the pairs of values of both parameters, one pair per classifier combination, were plotted on a 2D plane. For the DF measure, in the case of 2 and 5 classifiers in the ensemble, the relationship is relatively strong (Fig. 2). In general, higher diversity (lower DF value) led to better classification accuracy (lower error). In the case of 15 classifiers, the classification error varied little across all possible combinations of classifiers, so it cannot be stated that the relationship also holds for this ensemble size. The opposite situation is observed for the KW measure, where lower diversity leads to higher classification accuracy (Fig. 3). This occurs for both 2 and 5 classifiers and confirms that diversity measures cannot be chosen blindly when they are applied to the classification of machinery faults.

3. Conclusions

The application of a simple KNN classifier and the Bagging procedure leads to fairly good classification performance. Adding classifiers to the detection process influences the classification accuracy, but selecting the number of member classifiers is also a nontrivial task. Moreover, applying the Kohavi-Wolpert variance as the measure of diversity can lead to the selection of member classifiers that together form the best ensemble. Although the relationship is quite strong, it cannot be stated indisputably that the classifiers with the highest diversity will form the best ensemble. In this context, additional studies are needed to develop a new measure that takes into account the specific properties of vibration signals.

References

[1] Partridge D., Yates W. B. Engineering multiversion neural-net systems. Neural Computation, Vol. 8, Issue 4, 1996, p. 869-893.

[2] Margineantu D. D., Dietterich T. G. Pruning adaptive boosting. Proceedings of the 14th International Conference on Machine Learning, Vol. 97, 1997, p. 211-218.

[3] Giacinto G., Roli F. Design of effective neural network ensembles for image classification purposes. Image and Vision Computing, Vol. 19, 2001, p. 699-707.

[4] Kohavi R., Wolpert D. Bias plus variance decomposition for zero-one loss functions. Proceedings of the Thirteenth International Conference on Machine Learning, 1996, p. 275-283.

[5] Cunningham P., Carney J. Diversity versus quality in classification ensembles based on feature selection. Machine Learning: ECML 2000, Lecture Notes in Computer Science (Lecture Notes in Artificial Intelligence), Vol. 1810, Springer, Berlin, Heidelberg, 2000.