Abstract

This article addresses blind source separation when the observed signals are, as is most often the case, convolutive mixtures, and when the source signals are generally not independent and identically distributed. Joint block diagonalization (JBD) is one prevailing and representative approach to these difficulties. To improve on existing JBD methods, we present a class of simple Jacobi-type JBD algorithms based on the LU or QR factorization. Jacobi-type matrices let us replace high-dimensional minimization problems with a sequence of simple one-dimensional problems. The new methods are more general. The joint block-diagonalizer need not be orthogonal, the target matrices need not be positive definite or symmetric, and no preliminary whitening stage is required. Convergence is also guaranteed. We compare the proposed algorithms with existing state-of-the-art JBD algorithms through computer simulations and vibration experiments. The numerical results show that the two new algorithms are robust and effective and give a significant improvement: shorter convergence time, higher convergence precision and a higher success rate of block diagonalization. The proposed algorithms are also effective in separating convolutive mixtures of vibration signals.

1. Introduction

Blind source separation (BSS) deals with recovering both the unknown input sources and the unknown mixing system from the observed output mixtures alone. BSS has recently become the focus of intensive research because of its potential in many applications, such as antenna processing, speech processing and pattern recognition [1-3]. The recent successes of BSS may also carry over to mechanical engineering [4-7]. These reported applications use two kinds of BSS models: instantaneous and convolutive mixture models. Instantaneous mixture models have a simple structure and have been described in many papers and books [8-10]. Convolutive mixtures, however, pose a number of difficulties that seriously hinder the feasibility of BSS [11, 12]. Separating convolutive mixtures remains an active research topic, and no fully satisfactory algorithm has been proposed so far.

Many approaches have been proposed in recent years to solve the convolutive BSS (CBSS) problem. One prevailing and representative approach is joint block diagonalization (JBD), which can provide a potentially more elegant time-domain solution for CBSS [13]. In this article, we focus on the JBD problem. It was first treated in [14] for a set of positive definite symmetric matrices, and [15] made the same assumptions. Belouchrani et al. sketched several Jacobi strategies for the JBD problem in [16-18]. They turned JBD into a minimization problem solved iteratively, computing the joint block-diagonalizer as a product of Givens rotations, each of which minimizes a block-diagonality criterion around a fixed axis. Févotte and Theis [19, 20] pointed out that the behavior of the Jacobi approach depends strongly on the initialization of the orthogonal basis and on the choice of successive rotations, and they proposed strategies to improve the efficiency of JBD. These methods still carry critical constraints. The joint block-diagonalizer must be an orthogonal (unitary in the complex case) matrix, and the required spatial pre-whitening is likely to introduce a large error that cannot be corrected in the subsequent analysis. In [21], a gradient-based JBD algorithm was used for the same task with non-unitary joint block-diagonalizers. This approach converges slowly because the iteration uses a fixed step size. To overcome this shortcoming, gradient-based JBD algorithms with an optimal step size were proposed and studied in [22-24]. However, these algorithms tend to converge to local minima and have low computational efficiency. To eliminate the degenerate solutions of non-unitary JBD, Zhang [25] optimized a weighted least-squares criterion with a penalty term.
In [26], a tri-quadratic cost function was introduced, and an efficient algebraic method based on triple iterations was used to search for its minimum. Unfortunately, this method involves redundant values and introduces errors when the mixing matrix is inverted. New Jacobi-like algorithms for non-orthogonal joint diagonalization have been proposed [27, 28], but they cannot solve the block diagonalization problem.

Our first aim is to tackle the approximate JBD problem without the orthogonality constraint on the joint block-diagonalizer, i.e., with as few assumptions as possible on the matrix set. Our second aim is to provide JBD algorithms that are simple, effective and computationally efficient. To this end, we propose two new non-orthogonal JBD algorithms based on Jacobi-type schemes. They extend to the block-diagonal case the joint diagonalization (JD) algorithms [29] based on the LU and QR decompositions mentioned in [30, 31].

This article is organized as follows. Section 2.1 states the JBD problem. Section 2.2 derives the two proposed algorithms, and Section 2.3 proves their convergence. Section 3 shows how to apply the JBD algorithms to the CBSS problem. Section 4 presents numerical simulations that compare the proposed algorithms with the state-of-the-art gradient-based algorithms introduced in [23]. Section 5 shows that the new JBD algorithms separate convolutive vibration sources effectively and outperform the JBDOG and JRJBD algorithms [19].

2. Problem formulation and non-orthogonal JBD algorithms

2.1. The joint block diagonalization problem

Let be a set of matrices (square, but not necessarily Hermitian or positive definite) that can be approximately block-diagonalized as:

where is a general mixing matrix, the matrix denotes residual noise, and denotes the complex conjugate transpose (replaced by the transpose in the real domain). is an block-diagonal matrix whose diagonal blocks are square matrices of any size and whose off-diagonal blocks are zero matrices, i.e.:

where denotes the th diagonal block, of size , such that . In general, and in particular in CBSS, the diagonal blocks are assumed to have the same size, i.e. and , where denotes the null matrix. The JBD problem consists of estimating and when only the matrices are given. The JBD model remains unchanged if one substitutes by and by , where is a nonsingular block-diagonal matrix whose arbitrary blocks have the same dimensions as those of , and is an arbitrary block-wise permutation matrix. The JBD model is essentially unique when it is subject only to these amplitude and permutation indeterminacies [24].

Our aim is to present new algorithms for the non-orthogonal JBD problem. Neglecting the noise term, we consider the cost function suggested in [23]:

where is the Frobenius norm and stands for the inverse of the matrix (in the BSS context, serves as the separating matrix). This cost function can be regarded as the off-block-diagonal error. We seek a non-singular that makes as small as possible. Consider the square matrix :

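where, for all , the blocks are matrices (and ). Two matrix operators, and , can then be defined. The first keeps the diagonal blocks of the matrix and sets all off-diagonal blocks to zero. The second keeps the off-diagonal blocks and sets the diagonal blocks to zero.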
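The two block operators and the off-block-diagonal criterion can be checked numerically. The NumPy sketch below assumes that the cost of [23] has the usual form: the sum over the target matrices of the squared Frobenius norm of the off-block-diagonal part of B R_k B^T. It also assumes real matrices and equal block sizes n. The function names `bdiag`, `off_bdiag` and `jbd_cost` are hypothetical and are not taken from the paper.

```python
import numpy as np


def bdiag(M, n):
    """Keep the n-by-n diagonal blocks of M and set every off-diagonal block to zero."""
    out = np.zeros_like(M)
    for s in range(0, M.shape[0], n):
        out[s:s + n, s:s + n] = M[s:s + n, s:s + n]
    return out


def off_bdiag(M, n):
    """Keep the off-diagonal blocks of M and set the diagonal blocks to zero."""
    return M - bdiag(M, n)


def jbd_cost(B, Rs, n):
    """Off-block-diagonal criterion: sum_k ||OffBdiag(B R_k B^T)||_F^2 (real case)."""
    return sum(np.linalg.norm(off_bdiag(B @ R @ B.T, n), "fro") ** 2 for R in Rs)


# Usage: K exactly block-diagonal targets (r blocks of size n) mixed by a random A.
rng = np.random.default_rng(0)
n, r, K = 2, 3, 5
m = n * r
Ds = [bdiag(rng.standard_normal((m, m)), n) for _ in range(K)]
A = rng.standard_normal((m, m))
Rs = [A @ D @ A.T for D in Ds]
print(jbd_cost(np.eye(m), Rs, n))         # mixtures are not block diagonal: positive
print(jbd_cost(np.linalg.inv(A), Rs, n))  # B = A^{-1} restores the blocks: ~0 up to rounding
```

With B equal to the inverse of A, the criterion vanishes up to rounding. This is the non-singular minimizer the algorithms below look for, up to the block-diagonal and block-permutation indeterminacies.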

2.2. Two novel joint block diagonalization algorithms based on LU and QR factorizations

Any non-singular matrix admits the LU factorization [30]:

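where and are unit lower and upper triangular matrices, respectively. A unit triangular matrix is a triangular matrix whose diagonal elements are all one. Any remaining diagonal scaling factor is itself block diagonal and is therefore absorbed by the amplitude indeterminacy of the JBD model. also admits the QR factorization: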

where is an orthogonal matrix. Because of the indeterminacies of the JBD model, any non-singular square separating matrix can be represented by either of these two decompositions. Here, we implement JBD in the real domain (the case usually encountered in BSS), i.e., with real triangular and orthogonal factors. We can therefore use the decompositions in Eq. (4) or (5) and replace the minimization problem in Eq. (3) by two alternating stages involving the following sub-optimizations:

where , , and denote the estimates of , and , respectively. We adopt a Jacobi-type scheme to solve Eqs. (6), (7a) and (7b) through a sequence of elementary Jacobi transformations.
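The LU-type factorization can be checked numerically. This is an illustration under stated assumptions, not the authors' code. It uses Doolittle elimination without pivoting, which exists when every leading principal minor of B is non-zero. That holds with probability one for a Gaussian random matrix, but not for every non-singular matrix. The sketch then moves the diagonal of U to the left, giving B = D L U with D diagonal and L, U unit triangular. Because a diagonal matrix is block diagonal, the leading D falls within the amplitude indeterminacy. The helper `unit_ldu` is hypothetical. For the QR case, NumPy's `numpy.linalg.qr` returns the orthogonal and triangular factors directly.

```python
import numpy as np


def unit_ldu(B):
    """Factor B = D @ L @ U with D diagonal, L unit lower and U unit upper triangular.

    Doolittle elimination without pivoting: assumes every leading principal minor
    of B is non-zero, which holds with probability one for a Gaussian random B.
    """
    m = B.shape[0]
    L = np.eye(m)
    U = np.array(B, dtype=float)
    for j in range(m - 1):
        for i in range(j + 1, m):
            L[i, j] = U[i, j] / U[j, j]        # elimination multiplier
            U[i, j:] -= L[i, j] * U[j, j:]     # eliminate entry (i, j)
    U = np.triu(U)                             # clear rounding residue below the diagonal
    d = np.diag(U).copy()
    U_unit = U / d[:, None]                    # B = L @ diag(d) @ U_unit
    L_unit = L * d[None, :] / d[:, None]       # diag(d)^-1 L diag(d): still unit lower
    return np.diag(d), L_unit, U_unit          # B = diag(d) @ L_unit @ U_unit


rng = np.random.default_rng(1)
B = rng.standard_normal((6, 6))
D, L, U = unit_ldu(B)
print(np.allclose(D @ L @ U, B))
```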

The unit lower triangular Jacobi matrix is denoted by , where the parameter corresponds to the position (). That is, equals the identity matrix except that the entry indexed by is . Similarly, we define the unit upper triangular Jacobi matrix with parameter at the position (). To solve Eqs. (6) and (7a), we first find the optimal and in each iteration. For fixed , one iteration of the method consists of the following two steps:

1. Solving Eq. (6) with respect to of , and.

Updating for all (U-stage)

2. Solving Eq. (7a) with respect to of , and.

Updating for all (L-stage)
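The elementary Jacobi matrix and the corresponding update can be sketched as follows. This is a minimal sketch assuming the real-valued congruence R_k ← J R_k J^T, with 0-based indices. The names `jacobi_matrix` and `apply_jacobi` are hypothetical. The point is that J differs from the identity in a single entry, so J R J^T changes only row i and column i. It can therefore be computed with two scaled row/column additions instead of two full matrix products.

```python
import numpy as np


def jacobi_matrix(m, i, j, a):
    """m x m identity with the single entry (i, j), i != j, set to a.

    i < j gives a unit upper triangular matrix (U-stage), i > j a unit lower one (L-stage).
    """
    J = np.eye(m)
    J[i, j] = a
    return J


def apply_jacobi(R, i, j, a):
    """Return J R J^T without forming J: one row update, then one column update."""
    R = R.copy()
    R[i, :] += a * R[j, :]    # J @ R   : row i    <- row i + a * row j
    R[:, i] += a * R[:, j]    # (.) J^T : column i <- column i + a * column j
    return R


rng = np.random.default_rng(2)
R = rng.standard_normal((6, 6))
J = jacobi_matrix(6, 1, 4, 0.7)
print(np.allclose(apply_jacobi(R, 1, 4, 0.7), J @ R @ J.T))
print(np.linalg.det(J))      # unit triangular, so the determinant is 1
```

A side effect of this design is that det J = 1. The accumulated separating matrix therefore keeps its initial determinant, and the updates cannot drive it towards the trivial zero solution.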

Both proposed non-orthogonal JBD algorithms are of the above Jacobi type. They differ only in the adopted decomposition (LU or QR) and in implementation details. We now describe the proposed algorithms in detail. From Eq. (6), we have:

where is the block dimension, and .

For a matrix , denotes the row vector whose elements are taken from the th row of at the indices in . The row vector is defined similarly.

The optimal in Eq. (8) is given by:

If or , we set , i.e., cannot be reduced by this particular .
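For the off-block-diagonal criterion, the closed-form parameter can be reproduced in a few lines. This is our own derivation, offered as a plausible reading of Eq. (9); the paper's notation may differ. It assumes real matrices, the update R_k ← J R_k J^T with J = I + a e_i e_j^T, and equal block size n. Under these assumptions, only the entries of row i and column i outside the diagonal block containing i enter the criterion, and they change linearly in a. The criterion is therefore a quadratic C(a) = C(0) + 2ap + a²q, minimized at a = −p/q. The degenerate case p = 0 or q = 0 returns a = 0, as stated above. All function names are hypothetical.

```python
import numpy as np


def outside_block(m, i, n):
    """0-based indices not in the diagonal block (of size n) that contains index i."""
    return np.array([t for t in range(m) if t // n != i // n], dtype=int)


def optimal_param(Rs, i, j, n):
    """a* = -p/q minimizing C(a) = C(0) + 2ap + a^2 q for J = I + a e_i e_j^T, i != j."""
    idx = outside_block(Rs[0].shape[0], i, n)
    p = sum(R[i, idx] @ R[j, idx] + R[idx, i] @ R[idx, j] for R in Rs)
    q = sum(R[j, idx] @ R[j, idx] + R[idx, j] @ R[idx, j] for R in Rs)
    if p == 0 or q == 0:
        return 0.0            # this (i, j) cannot reduce the criterion
    return -p / q


def off_cost(Rs, n):
    """Sum over k of the squared off-block-diagonal entries of R_k."""
    m = Rs[0].shape[0]
    off = (np.arange(m)[:, None] // n) != (np.arange(m)[None, :] // n)
    return sum(np.sum(R[off] ** 2) for R in Rs)


def transformed(Rs, i, j, a):
    """Return [J R J^T for R in Rs] with J = I + a e_i e_j^T."""
    J = np.eye(Rs[0].shape[0])
    J[i, j] = a
    return [J @ R @ J.T for R in Rs]


rng = np.random.default_rng(3)
Rs = [rng.standard_normal((6, 6)) for _ in range(4)]
a = optimal_param(Rs, 0, 3, 2)                      # U-stage pair (i < j)
print(off_cost(Rs, 2), off_cost(transformed(Rs, 0, 3, a), 2))
```

The same function covers the L-stage: the formula does not depend on whether i < j or i > j.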

For the lower triangular matrices , we have a similar result:

where is the block dimension, and :

is a row vector whose elements satisfy , where rounds each element of to the nearest integer towards plus infinity (the ceiling function):

The optimal value in Eq. (10) is given by:

If or , we set , i.e., cannot be reduced by this particular . Computing the optimal parameter involves only a polynomial of degree 2 in the real domain. This is cheaper than other JBD methods such as JBDOG, JBDORG and JBD-NCG [22-24], which must solve a polynomial of degree 4.
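The degree-2 structure can be seen directly. The notation below is ours and is an assumption, since the paper's symbols are not reproduced in this text. \(\mathbf{R}_k\) denotes the current target matrices, already transformed by the current estimate of the separating matrix. \(C\) is the sum over \(k\) of their squared off-block-diagonal entries. \(\mathbf{J}=\mathbf{I}+a\,\mathbf{e}_i\mathbf{e}_j^T\) with \(i\neq j\) is an elementary unit triangular Jacobi matrix (upper for \(i<j\), lower for \(i>j\)). \(\bar{\mathcal{B}}(i)\) is the set of indices outside the diagonal block that contains \(i\). Only row \(i\) and column \(i\) of \(\mathbf{J}\mathbf{R}_k\mathbf{J}^T\) differ from \(\mathbf{R}_k\), and the entry \((i,i)\) lies inside a diagonal block. The off-block entries that change are therefore affine in \(a\):

$$
\left[\mathbf{J}\mathbf{R}_k\mathbf{J}^T\right]_{im} = r^{(k)}_{im} + a\,r^{(k)}_{jm},
\qquad
\left[\mathbf{J}\mathbf{R}_k\mathbf{J}^T\right]_{mi} = r^{(k)}_{mi} + a\,r^{(k)}_{mj},
\qquad m \in \bar{\mathcal{B}}(i),
$$

so that

$$
C(a) = C(0) + 2a\,p + a^{2}q,
\qquad
p = \sum_{k}\sum_{m\in\bar{\mathcal{B}}(i)}\left(r^{(k)}_{im}r^{(k)}_{jm} + r^{(k)}_{mi}r^{(k)}_{mj}\right),
\qquad
q = \sum_{k}\sum_{m\in\bar{\mathcal{B}}(i)}\left(\big(r^{(k)}_{jm}\big)^{2} + \big(r^{(k)}_{mj}\big)^{2}\right).
$$

For \(q>0\), the minimizer and the resulting decrease are

$$
a^{\star} = -\frac{p}{q},
\qquad
C(a^{\star}) = C(0) - \frac{p^{2}}{q} \le C(0),
$$

and when \(p=0\) or \(q=0\) the best choice is \(a=0\). Under these assumptions, each elementary step can never increase the criterion, which is the property used in Section 2.3.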

In the QR algorithm, we use the QR decomposition of . The sub-optimization problem of the Q-stage in Eq. (7b) is therefore an orthogonal JBD problem, which can be solved by Févotte's Jacobi-type algorithm [19]. Févotte showed that the behavior of the Jacobi approach depends strongly on the initialization of the orthogonal basis and on the choice of successive rotations. Here, the algorithm is initialized with the matrix provided by the joint diagonalization of . At each iteration, it chooses the couple that gives the largest decrease of the criterion .

With the U-stage and the L-stage (Q-stage) in hand, we alternate the two stages until convergence. There are several ways to control the convergence of JBD algorithms. One option is to stop when the parameter values in every U-stage or L-stage (Q-stage) sweep are small enough. Small parameters mean that the elementary transformations contribute very little, and hence that convergence has been reached. Another option is to monitor the sum of the squared off-block-diagonal norms at each iteration and stop when its change falls below a preset threshold. The values of between two successive complete runs of the U-stage and the L-stage are usually used as a termination criterion. Here, we stop the iteration when , a quantity that measures the off-block-diagonal part relative to the block-diagonal part. This criterion applies to any iterative JBD method, so it also allows a more direct comparison between methods. We therefore regard it as a more rational and effective termination criterion. In the remainder of this article, we use one of the following termination criteria:

(st1) ;

(st2) ;

(st3) (described in Section 4).

We call the new JBD approaches based on the LU and QR factorizations LUJBD and QRJBD, respectively, and summarize them as follows:

1. Set .

2. U-stage (R-stage): set , for

Find from Eq. (9)

Update and

3. L-stage (Q-stage): set (), for

Find from Eq. (11) ( from [20])

Update ()

and ()

4. If none of the termination criteria (st1), (st2) or (st3) is satisfied, set () and go to step 2; otherwise, stop.
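The steps above can be assembled into a small LUJBD-style loop. This hypothetical NumPy sketch is not the authors' implementation. It alternates U-stage (i < j) and L-stage (i > j) sweeps of unit triangular Jacobi updates, each using the closed-form quadratic minimizer for the off-block-diagonal criterion. It stops when the relative decrease over a full sweep falls below `tol`. The sweep order, the stopping rule (a stand-in for (st1)-(st3)) and the names `lujbd_sketch`, `tol` and `max_sweeps` are assumptions. The QR variant, whose Q-stage relies on Févotte's orthogonal algorithm [19], is not reproduced.

```python
import numpy as np


def off_cost(Rs, n):
    """Sum over k of the squared off-block-diagonal entries of R_k (block size n)."""
    m = Rs[0].shape[0]
    off = (np.arange(m)[:, None] // n) != (np.arange(m)[None, :] // n)
    return sum(np.sum(R[off] ** 2) for R in Rs)


def best_param(Rs, i, j, n):
    """Closed-form minimizer of the quadratic criterion for J = I + a e_i e_j^T."""
    idx = np.array([t for t in range(Rs[0].shape[0]) if t // n != i // n], dtype=int)
    p = sum(R[i, idx] @ R[j, idx] + R[idx, i] @ R[idx, j] for R in Rs)
    q = sum(R[j, idx] @ R[j, idx] + R[idx, j] @ R[idx, j] for R in Rs)
    return 0.0 if (p == 0 or q == 0) else -p / q


def lujbd_sketch(Rs, n, tol=1e-10, max_sweeps=200):
    """Alternate U-stage (i < j) and L-stage (i > j) sweeps of unit triangular updates."""
    m = Rs[0].shape[0]
    Rs = [np.array(R, dtype=float) for R in Rs]   # work on copies
    B = np.eye(m)
    history = [off_cost(Rs, n)]
    for _ in range(max_sweeps):
        for upper in (True, False):               # U-stage, then L-stage
            for i in range(m):
                for j in range(m):
                    if i == j or (i < j) != upper:
                        continue
                    a = best_param(Rs, i, j, n)
                    if a == 0.0:
                        continue
                    for R in Rs:                  # R <- J R J^T (row, then column update)
                        R[i, :] += a * R[j, :]
                        R[:, i] += a * R[:, j]
                    B[i, :] += a * B[j, :]        # B <- J B
        history.append(off_cost(Rs, n))
        # Relative-decrease stopping rule (an assumed stand-in for (st1)-(st3)).
        if history[-2] - history[-1] <= tol * history[-2]:
            break
    return B, history


rng = np.random.default_rng(4)
n, r, K = 2, 3, 10
m = n * r
off = (np.arange(m)[:, None] // n) != (np.arange(m)[None, :] // n)
Ds = [rng.standard_normal((m, m)) * ~off for _ in range(K)]   # exact block-diagonal targets
A = rng.standard_normal((m, m))
Rs = [A @ D @ A.T for D in Ds]
B, history = lujbd_sketch(Rs, n)
print(len(history) - 1, history[0], history[-1])
```

Because every elementary step minimizes the criterion exactly (and a = 0 is always admissible), the recorded history is non-increasing up to rounding. This is the monotonicity argument of Section 2.3.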

Triangular and orthogonal Jacobi matrices let us replace each of these high-dimensional minimization problems by a sequence of simple one-dimensional problems. When updating , each matrix multiplication reduces to a few vector scalings and vector additions, because each elementary Jacobi matrix differs from the identity in only a few entries and therefore modifies only a few rows and columns. Each update thus costs less time than in other non-orthogonal JBD methods [22-24]. The existence and uniqueness of the joint block diagonalization for this cost function have been proved in [20].

2.3. Convergence of the algorithm

By construction, the algorithm ensures that the criterion does not increase from one iteration to the next. From Eq. (8) or (10), we have:

Respectively , , and .

Substituting from Eq. (9) or (11) gives:

Equality holds in Eq. (12) only if or , in which case we set . In the CBSS problem of Section 3, however, usually and .

Similarly, or has been proved in [20].

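At each iteration of the algorithm, the matrix obtained after the rotations is thus "at least as block diagonal as" the matrix at the previous iteration. The sequence of criterion values is therefore monotonically non-increasing and bounded below by zero. Since every bounded monotonic sequence of real numbers converges, the convergence of the criterion is guaranteed.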

3. Application to CBSS

Convolutive BSS (CBSS) problems occur in various applications. One typical application, studied in detail in this paper, is the blind separation of vibration signals. CBSS consists of estimating a set of unobserved source signals from their convolutive mixtures without a priori knowledge of the sources or of the mixing system. The CBSS problem can be solved by JBD of a set of covariance matrices. We consider the following discrete-time MIMO model [25]:

where is the discrete time index and denotes the FIR filter length. denotes the source signal vector, where is the number of sources, and is the mixture vector formed by the observed signals. In this linear time-invariant mixing system, the matrix impulse response consists of the channel impulse responses . For the signal received on the th array element, we apply a sliding window and form the column vector:

Stacking the windowed signals of all array elements then defines the observed signal vector:

Hence:

where , and:

is a block element of , of dimension , and:

Applying the proposed algorithms to CBSS requires the following assumptions on the mixture model in Eq. (14) [25].

Assumption 1. The source signals are zero mean, individually correlated in time but mutually uncorrelated. That is, for every time delay , the cross-correlation functions satisfy , , and the auto-correlation functions satisfy , . In addition, the source signals have different auto-correlation functions.

Assumption 2. The sensor noises are zero mean, independent and identically distributed, with the same power . The noises are assumed to be independent of the sources.

Assumption 3. The mixing matrix is assumed to have full column rank. This requires the sliding window length to satisfy .

Assumption 1 is the core assumption. As shown in [25], it allows the sources to be separated from their convolutive mixtures by jointly block-diagonalizing the second-order statistics of the reformulated observed signals (see below). Assumption 2 makes the noise easy to handle. Assumption 3 guarantees that the mixing system is invertible, a necessary condition for the source signals to be completely separated.
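The sliding-window reformulation can be sketched as follows, under stated assumptions. The sensor signals form an M × T array. The FIR mixing filters are given as an array H of shape M × N × (number of taps). For every sensor m and time t, the stacked column holds x_m(t), x_m(t−1), …, x_m(t−P+1), with sensors concatenated one after another. This ordering is a common convention for this model, assumed here rather than read from Eq. (13). The names `convolutive_mix` and `sliding_window_stack` are hypothetical.

```python
import numpy as np


def convolutive_mix(s, H):
    """x_m(t) = sum_n sum_l H[m, n, l] * s_n(t - l), truncated to the length of s."""
    M, N, _ = H.shape
    T = s.shape[1]
    x = np.zeros((M, T))
    for m in range(M):
        for n in range(N):
            x[m] += np.convolve(s[n], H[m, n])[:T]   # causal FIR filtering
    return x


def sliding_window_stack(x, P):
    """Stack x_m(t), x_m(t-1), ..., x_m(t-P+1) for every sensor m into one column per t.

    Returns an (M*P) x (T-P+1) array; column c corresponds to time t = c + P - 1.
    """
    M, T = x.shape
    cols = T - P + 1
    X = np.empty((M * P, cols))
    for m in range(M):
        for d in range(P):                            # d = delay inside the window
            X[m * P + d] = x[m, P - 1 - d:T - d]
    return X


rng = np.random.default_rng(5)
s = rng.standard_normal((3, 1000))                    # N = 3 sources
H = rng.standard_normal((5, 3, 4))                    # M = 5 sensors, 4-tap filters
X = sliding_window_stack(convolutive_mix(s, H), P=6)
print(X.shape)
```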

Under these assumptions, the spatial covariance matrices of the observations satisfy:

where denotes the successive time delays, and is computed according to [26]. It follows from the above assumptions that the matrices in Eq. (15) take the following forms, respectively:

where the block matrices in and have the following form:

where and have a similar form, each being a matrix. According to Eq. (15), the set of matrices can be jointly block-diagonalized and satisfies , i.e., it has the JBD structure of Section 2.1. Hence, the JBD methods of Section 2.2 can be used to solve the CBSS problem. Once the joint block-diagonalizer is determined, the recovered signals are obtained, up to a permutation and a filter, by:

Note that the amplitude and permutation indeterminacies of JBD algorithms correspond to the well-known indeterminacies of CBSS. In practice, the correlation matrices are replaced by sample estimates computed from the discrete time series. To obtain good estimates, the observed sequences (the output of the reformulated model (15)) may be divided into segments of appropriate length.
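The sample estimates mentioned above can be computed as in this sketch. It assumes stacked observations X (rows are stacked window entries, columns are time samples), removes the sample mean to enforce the zero-mean assumption, and averages X(t) X(t−τ)^T over the T − τ available pairs for each lag τ ≥ 0. The lag set and the name `lagged_covariances` are assumptions. The estimates for τ ≠ 0 are generally not symmetric. This fits the formulation of Section 2.1, which does not require symmetric target matrices.

```python
import numpy as np


def lagged_covariances(X, lags):
    """Sample estimates R(tau) = mean_t X[:, t] X[:, t - tau]^T for each lag tau >= 0."""
    X = X - X.mean(axis=1, keepdims=True)        # enforce the zero-mean assumption
    T = X.shape[1]
    Rs = []
    for tau in lags:
        Rs.append(X[:, tau:] @ X[:, :T - tau].T / (T - tau))
    return Rs


rng = np.random.default_rng(8)
X = rng.standard_normal((12, 2000))
Rs = lagged_covariances(X, lags=range(0, 60, 2))   # 30 linearly spaced lags
print(len(Rs), Rs[0].shape)
```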

4. Numerical simulations

We now present simulations that illustrate the behavior and performance of the proposed JBD algorithms (LUJBD and QRJBD). We also compare their robustness and efficiency with those of JBDOG and JBDORG on randomly generated data. To this end, a set of real block-diagonal matrices (for all ) is built from random entries drawn from a Gaussian distribution with zero mean and unit variance. Random noise entries drawn from a Gaussian distribution with zero mean and variance are then added to the off-diagonal blocks of these matrices . The signal-to-noise ratio is defined as .
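The synthetic test set can be generated along the following lines. This sketch rests on two assumptions. First, the noisy targets are taken as R_k = A (D_k + N_k) A^T. Second, because the SNR formula is not reproduced in this text, SNR is taken as 10 log10(1/σ²), i.e. unit-variance signal entries against noise of variance σ² on the off-diagonal blocks. The function name `make_jbd_targets` is hypothetical.

```python
import numpy as np


def make_jbd_targets(A, K, n, snr_db, rng):
    """Return (D_k, R_k) with R_k = A (D_k + N_k) A^T, D_k block diagonal, N_k off-block noise."""
    m = A.shape[0]
    sigma = 10.0 ** (-snr_db / 20.0)           # assumed: SNR = 10 log10(1 / sigma^2)
    off = (np.arange(m)[:, None] // n) != (np.arange(m)[None, :] // n)
    Ds, Rs = [], []
    for _ in range(K):
        D = rng.standard_normal((m, m)) * ~off          # keep only the n x n diagonal blocks
        N = sigma * rng.standard_normal((m, m)) * off   # noise only on off-diagonal blocks
        Ds.append(D)
        Rs.append(A @ (D + N) @ A.T)
    return Ds, Rs


rng = np.random.default_rng(6)
A = rng.standard_normal((9, 9))                # random mixing matrix, zero mean, unit variance
Ds, Rs = make_jbd_targets(A, K=20, n=3, snr_db=60.0, rng=rng)
print(len(Rs), Rs[0].shape)
```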

To measure the quality of the different algorithms, the following performance index is used [22]:

where () denotes the th block matrix of . This index, which is also used for CBSS, accounts for the inherent indeterminacies of the BSS problem. The smaller the performance index , the better the separation quality. In the figures, is given in dB, i.e., . In all simulations, the entries of the true mixing matrix or are drawn randomly with zero mean and unit variance.
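A block performance index of this kind can be sketched as follows. Because the formula of [22] is not reproduced in this text, we assume the block-wise Moreau-Amari form commonly used for JBD. It is computed on the global matrix G = B A partitioned into r × r blocks of size n: I(G) = 1/(r(r−1)) [ Σ_i (Σ_j ||G_ij||² / max_l ||G_il||² − 1) + Σ_j (Σ_i ||G_ij||² / max_l ||G_lj||² − 1) ]. It vanishes when G is a block-permuted block-diagonal matrix, which is exactly the JBD indeterminacy. The dB conversion 10 log10(I) is also an assumption. The names `block_perf_index` and `index_db` are hypothetical.

```python
import numpy as np


def block_perf_index(G, n):
    """Block Moreau-Amari-type index of the global matrix G = B A (block size n)."""
    r = G.shape[0] // n
    # E[i, j] = squared Frobenius norm of block (i, j)
    E = np.array([[np.sum(G[i * n:(i + 1) * n, j * n:(j + 1) * n] ** 2) for j in range(r)]
                  for i in range(r)])
    rows = np.sum(E / E.max(axis=1, keepdims=True), axis=1) - 1.0
    cols = np.sum(E / E.max(axis=0, keepdims=True), axis=0) - 1.0
    return (rows.sum() + cols.sum()) / (r * (r - 1))


def index_db(G, n):
    """Index in dB, assuming the convention 10 log10(I)."""
    return 10.0 * np.log10(block_perf_index(G, n))


rng = np.random.default_rng(7)
n, r = 3, 3
A = rng.standard_normal((n * r, n * r))
B = np.linalg.inv(A)
print(index_db(B @ A + 1e-3 * rng.standard_normal(A.shape), n))   # near-perfect separation
```

Each of the two sums is at most r(r−1), so under this definition the index lies between 0 and 2.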

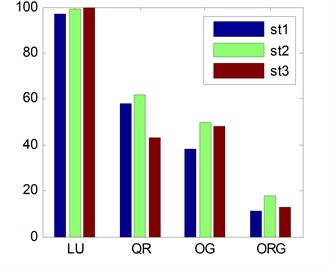

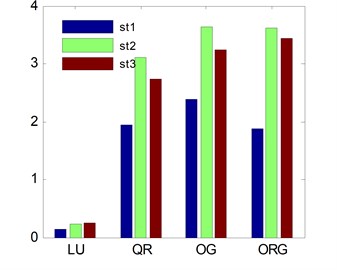

Figs. 1 and 2 consider the exactly determined case with 9, 3, 20 and 60 dB; the results are averaged over 100 runs. Fig. 1 shows the percentage of successful runs, where a run is declared successful if the following criteria are satisfied: , (st1), (st2). Fig. 2 shows the average running time per successful convergence. The approaches proposed in Section 2.2 perform better than the state-of-the-art gradient-based methods JBDOG and JBDORG. LUJBD and QRJBD converge to the global minimum more frequently (Fig. 1) and faster (Fig. 2) than JBDOG and JBDORG. Under the same termination criteria, LUJBD and QRJBD also outperform JBDOG and JBDORG, and LUJBD performs best. Different JBD methods also differ in their sensitivity to the termination criteria, so the percentage of successful runs and the average running time vary as well. The termination criteria should therefore be chosen to balance block-diagonalization accuracy, success rate and convergence speed.

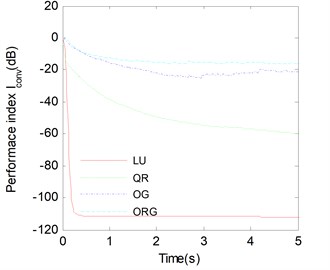

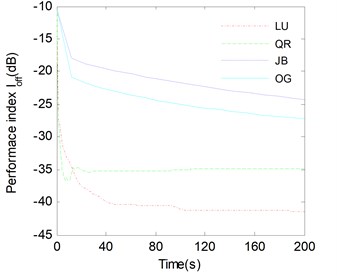

Fig. 3 again considers the exactly determined case with 9, 3, 20 and 60 dB, with results averaged over 100 runs. Because the cost per iteration differs between approaches, we compare all methods over the same convergence time. This is more reasonable than comparing them over a fixed number of iterations, as in [23, 25]. The evolution of the performance index versus convergence time shows that LUJBD and QRJBD converge better than JBDOG and JBDORG. They take less time to reach a stable performance level, and they achieve a smaller performance index for the same convergence time. The BSS methods proposed in this paper thus offer shorter convergence time, higher convergence precision and faster convergence.

Fig. 1. The percentage of successful runs

Fig. 2. The average running time per successful convergence

Fig. 3. Average performance index Iconv versus the convergence time for the LUJBD, QRJBD, JBDOG and JBDORG algorithms (SNR = 60 dB, K = 20 matrices)

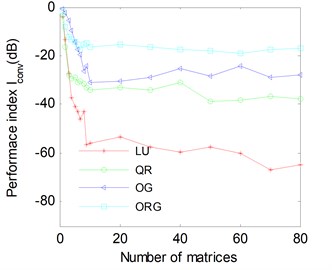

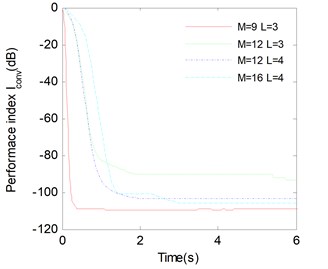

Fig. 4 shows how the number of matrices affects performance. The results are averaged over 100 runs. The same stopping rule is used: the iterations stop when one of the termination criteria (st1), (st2) or (st3) is satisfied. We set 9, 3 and 60 dB. Jointly block-diagonalizing more matrices improves performance, but the computational cost also grows with the number of matrices. The number of matrices should therefore balance the accuracy and the complexity of the JBD algorithms. According to Fig. 4, 20 matrices is a good choice. LUJBD, which converges better, turns out to be slightly superior to the other JBD algorithms.

Fig. 4. Average Iconv versus the number of matrices for the LUJBD, QRJBD, JBDOG and JBDORG algorithms (SNR = 60 dB, averaged over 100 runs)

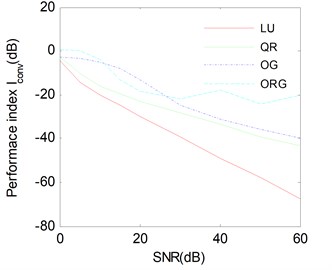

Fig. 5. Average Iconv versus SNR for the LUJBD, QRJBD, JBDOG and JBDORG algorithms (K = 20, averaged over 100 runs)

With the same stopping criteria and other settings as in Fig. 4, Fig. 5 shows that the average performance of each algorithm improves as the SNR grows, apart from a few fluctuations. LUJBD is slightly more sensitive to noise than the other three methods. For any given SNR, however, the average performance index of LUJBD and QRJBD is always better than that of the two gradient-based methods.

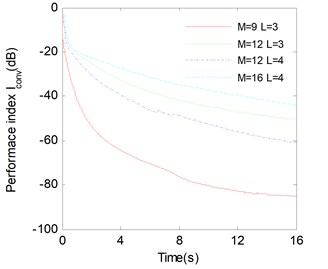

Finally, Figs. 6 and 7 show the separation performance of both algorithms versus the matrix dimension and the block dimension ( 20, 60 dB), with results averaged over 100 runs. For a fixed block dimension, a larger matrix dimension lowers the estimation accuracy of the mixing matrix. For a fixed matrix dimension, a larger block dimension improves it. Therefore, when the dimension of the target matrices increases, the performance index can be improved by increasing the number of matrices and the block dimension.

Fig. 6. Average Iconv versus the convergence time with different M and L for the LUJBD algorithm (SNR = 60 dB, K = 20 matrices)

Fig. 7. Average Iconv versus the convergence time with different M and L for the QRJBD algorithm (SNR = 60 dB, K = 20 matrices)

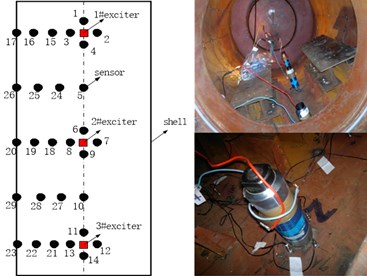

5. Application of CBSS to vibration source separation

The experimental model, a double-stiffened cylindrical shell used to simulate a cabin, is depicted in Fig. 8. Underwater vibration tests of the shell were carried out in an anechoic water pool 16 m long, 8 m wide and 8 m high. Inside the shell, three exciters were placed in the front part (excitation source No. 1), the middle part (No. 2) and the rear part (No. 3) to simulate the vibration sources of internal equipment. Twenty-nine vibration acceleration sensors were arranged on the inner shell, and four accelerometers, which capture rich vibration information, were placed near each excitation point. The locations of the exciters and acceleration sensors are shown in Fig. 9. Only vertical excitation and response were considered in this test, and the model was submerged at a depth of 3.75 m. The three exciters were controlled from the shore and were operated either separately or simultaneously, according to the test requirements. They emitted continuous sinusoidal signals of equal energy at different frequencies: 5 kHz, 4 kHz and 3 kHz for exciters No. 1, No. 2 and No. 3, respectively. The vibration data were collected while the exciters were in stable operation, with a sampling frequency of 16384 Hz and a sampling time of 10 s.

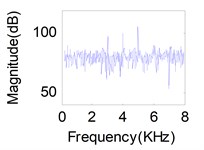

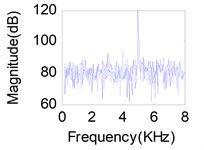

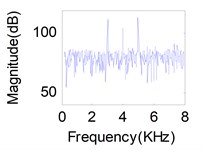

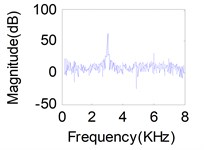

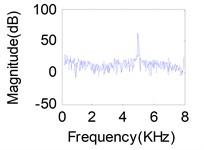

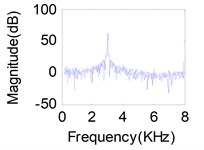

Figs. 11(d), (e) and (f) show the mixture signals recorded by three sensors (17, 20 and 23) on the inner shell when all exciters act simultaneously. Because of mutual spectral aliasing, these mixtures do not represent the real vibration characteristics and cannot be used directly. Moreover, vibration mixtures are most often convolutive, which makes them hard to handle, and the vibration sources are often not strictly independent. Traditional source separation methods therefore struggle to separate mechanical vibration sources. The Jacobi-type JBD algorithms based on the LU or QR factorizations proposed in this article are designed to overcome these shortcomings.

The termination criterion is selected to ensure the stability of the solution. We use 5 observed signals (sensors 17, 20, 23, 26 and 29) and 3 source signals. The model parameters , and are selected so that the solution accuracy satisfies –35 dB. The filter length is 13 and the sliding window length is 17. A set of 30 covariance matrices is used, with time lags taking linearly spaced values.

In Fig. 10, the evolution of the performance index versus convergence time shows that LUJBD and QRJBD converge better than JBDOG and JRJBD [20]. LUJBD and QRJBD take less time to reach –20 dB and achieve a smaller performance index for the same convergence time. The BSS methods proposed in this paper thus offer higher convergence precision and faster convergence. The lower accuracy and efficiency of the other two algorithms have two main causes. (1) JBDOG generally converges slowly and tends to converge to a local minimum, and the inversion of ill-conditioned matrices often degrades its separation accuracy. (2) The joint block-diagonalizer of JRJBD is an orthogonal matrix, so spatial pre-whitening must be applied. Pre-whitening is likely to introduce a large error that cannot be corrected in the subsequent analysis.

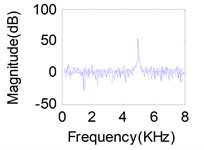

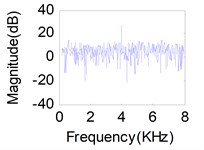

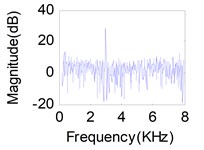

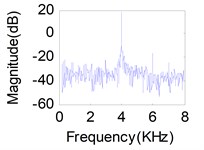

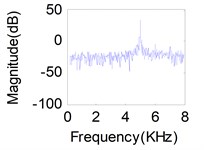

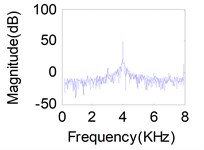

Figs. 11(a), (b) and (c) show the source signals recorded by the acceleration sensors near the excitation points when each exciter acts alone. We use acceleration sensors 1, 8 and 14, which have a higher signal-to-noise ratio. The recovered primary sources are shown in Figs. 11(g), (h) and (i) for LUJBD and in Figs. 11(j), (k) and (l) for QRJBD. Their agreement with the true sources, up to the inherent permutation and amplitude indeterminacies, shows that the proposed separation algorithms are effective.

Fig. 8. The experimental cabin model

Fig. 9. The locations of the exciters and acceleration sensors

Fig. 10. Average performance index Iconv versus the convergence time for the four algorithms (K = 30, L' = 13, P = 17)

Fig. 11. Separation results for the known channel. Sources: a) s1, b) s2, c) s3. Mixtures: d) x1, e) x2, f) x3. Sources separated by LUJBD: g) s1', h) s2', i) s3'. Sources separated by QRJBD: j) s1'', k) s2'', l) s3''


6. Conclusions

In this article, we presented a class of simple Jacobi-type JBD algorithms based on the LU or QR factorization for the convolutive BSS (CBSS) problem. Jacobi-type matrices replace high-dimensional minimization problems with a sequence of simple one-dimensional problems. The two new methods, LUJBD and QRJBD, do not require the joint block-diagonalizer to be orthogonal or the target matrices to be positive definite or symmetric. We also proposed a new convergence criterion that measures the off-block-diagonal part relative to the block-diagonal part. This criterion applies to any iterative JBD method and gives a more direct comparison between methods. In LUJBD and QRJBD, computing the optimal parameter involves only a polynomial of degree 2 in the real domain, whereas other JBD methods must solve a polynomial of degree 4. The two new algorithms therefore take less time than other non-orthogonal JBD methods, and the convergence of their criterion is guaranteed.

We compared the proposed approaches with the state-of-the-art gradient-based JBD approaches (JBDOG and JBDORG) in a variety of numerical simulations. LUJBD and QRJBD converge to the global minimum more frequently and faster than JBDOG and JBDORG. Appropriate termination criteria help balance block-diagonalization accuracy, success rate and convergence speed. Jointly block-diagonalizing more target matrices improves performance but increases the computational cost, so the number of matrices should balance accuracy and complexity. The performance index can also be improved by increasing the block dimension and decreasing the matrix dimension. We then applied the two new JBD algorithms and the JBDOG and JRJBD methods to the separation of real vibration sources. LUJBD and QRJBD showed better convergence performance and accuracy than JBDOG and JRJBD. Finally, the agreement between the recovered primary sources and the true sources demonstrates that the proposed algorithms can separate convolutive mixtures of vibration signals.

References

[1] Castella M., Bianchi P., Chevreuil A., et al. A blind source separation framework for detecting CPM sources mixed by a convolutive MIMO filter. Signal Processing, Vol. 86, 2006, p. 1950-1967.

[2] Zhang Y., Zhao Y. X. Modulation domain blind speech separation in noisy environments. Speech Communication, Vol. 55, Issue 10, 2013, p. 1081-1099.

[3] Pelegrina G. D., Duarte L. T., Jutten C. Blind source separation and feature extraction in concurrent control charts pattern recognition: novel analyses and a comparison of different methods. Computers and Industrial Engineering, Vol. 92, 2016, p. 105-114.

[4] Popescu T. D. Blind separation of vibration signals and source change detection: application to machine monitoring. Applied Mathematical Modelling, Vol. 34, 2010, p. 3408-3421.

[5] McNeill S. I., Zimmerman D. C. Relating independent components to free-vibration modal responses. Shock and Vibration, Vol. 17, 2010, p. 161-170.

[6] Lee D. S., Cho D. S., Kim K., et al. A simple iterative independent component analysis algorithm for vibration source signal identification of complex structures. International Journal of Naval Architecture and Ocean Engineering, Vol. 7, Issue 1, 2015, p. 128-141.

[7] Li Y. B., Xu M. Q., Wei Y., et al. An improvement EMD method based on the optimized rational Hermite interpolation approach and its application to gear fault diagnosis. Measurement, Vol. 63, 2015, p. 330-345.

[8] Popescu T. D. Analysis of traffic-induced vibrations by blind source separation with application in building monitoring. Mathematics and Computers in Simulation, Vol. 80, 2010, p. 2374-2385.

[9] Babaie-Zadeh M., Jutten C. A general approach for mutual information minimization and its application to blind source separation. Signal Processing, Vol. 85, 2005, p. 975-995.

[10] Jing J. P., Meng G. A novel method for multi-fault diagnosis of rotor system. Mechanism and Machine Theory, Vol. 44, 2009, p. 697-709.

[11] Antoni J. Blind separation of vibration components: principles and demonstrations. Mechanical Systems and Signal Processing, Vol. 19, 2005, p. 1166-1180.

[12] Rhabi M. E., Fenniri H., Keziou A., et al. A robust algorithm for convolutive blind source separation in presence of noise. Signal Processing, Vol. 93, 2013, p. 818-827.

[13] Bousbia-Salah H., Belouchrani A., Abed-Meraim K. Blind separation of convolutive mixtures using joint block diagonalization. International Symposium on Signal Processing and its Applications (ISSPA), Kuala Lumpur, Malaysia, 2001, p. 13-16.

[14] Flury B. D., Neuenschwander B. E. Simultaneous diagonalization algorithm with applications in multivariate statistics. International Series of Numerical Mathematics, Vol. 19, 1994, p. 179-205.

[15] Pham D. T. Blind separation of cyclostationary sources using joint block approximate diagonalization. Lecture Notes in Computer Science, Vol. 4666, 2007, p. 244-251.

[16] Belouchrani A., Amin M. G., Abed-Meraim K. Direction finding in correlated noise fields based on joint block-diagonalization of spatio-temporal correlation matrices. IEEE Signal Processing Letters, 1997, p. 266-268.

[17] Belouchrani A., Abed-Meraim K., Hua Y. Jacobi-like algorithms for joint block diagonalization: application to source localization. Proceedings of IEEE International Workshop on Intelligent Signal Processing and Communication Systems, Melbourne, 1998.

[18] Abed-Meraim K., Belouchrani A. Algorithms for joint block diagonalization. 12th European Signal Processing Conference, Vienna, Austria, 2004, p. 209-212.

[19] Févotte C., Theis F. J. Pivot selection strategies in Jacobi joint block-diagonalization. Lecture Notes in Computer Science, Vol. 4666, 2007, p. 177-184.

[20] Févotte C., Theis F. J. Orthonormal approximate joint block-diagonalization. Technical Report GET/Telecom Paris 2007D007, 2007, p. 1-24.

[21] Ghennioui H., Fadaili E. M., Thirion-Moreau N., et al. A non-unitary joint block diagonalization algorithm for blind separation of convolutive mixtures of sources. IEEE Signal Processing Letters, Vol. 14, Issue 11, 2007, p. 860-863.

[22] Ghennioui H., Thirion-Moreau N., Moreau E., et al. Non-unitary joint-block diagonalization of complex matrices using a gradient approach. Lecture Notes in Computer Science, Vol. 4666, 2007, p. 201-208.

[23] Ghennioui H., Thirion-Moreau N., Moreau E., et al. Gradient-based joint block diagonalization algorithms: application to blind separation of FIR convolutive mixture. Signal Processing, Vol. 90, 2010, p. 1836-1849.

[24] Nion D. A tensor framework for nonunitary joint block diagonalization. IEEE Transactions on Signal Processing, Vol. 59, Issue 10, 2011, p. 4585-4594.

[25] Zhang W. T., Lou S. T., Lu H. M. Fast nonunitary joint block diagonalization with degenerate solution elimination for convolutive blind source separation. Digital Signal Processing, Vol. 22, 2012, p. 808-819.

[26] Xu X. F., Feng D. Z., Zheng W. X. Convolutive blind source separation based on joint block Toeplitzation and block-inner diagonalization. Signal Processing, Vol. 90, 2010, p. 119-133.

[27] Zhang W. T., Lou S. T. A recursive solution to nonunitary joint diagonalization. Signal Processing, Vol. 93, 2013, p. 313-320.

[28] Cheng G. H., Li S. M., Moreau E. New Jacobi-like algorithms for non-orthogonal joint diagonalization of Hermitian matrices. Signal Processing, Vol. 128, 2016, p. 440-448.

[29] Zeng T. J., Feng Q. Y. Non-orthogonal joint diagonalization algorithm based on hybrid trust region method and its application to blind source separation. Neurocomputing, Vol. 133, 2014, p. 280-294.

[30] Afsari B. Simple LU and QR based non-orthogonal matrix joint diagonalization. Lecture Notes in Computer Science, Vol. 3889, 2006, p. 1-7.

[31] Gong X. F., Wang K., Lin Q. H., et al. Simultaneous source localization and polarization estimation via non-orthogonal joint diagonalization with vector-sensors. Sensors, Vol. 12, 2012, p. 3394-3417.

About this article

The work described in this paper was supported by the National Natural Science Foundation of China under project No. 51609251, "Research on the identification of cross-coupling mechanical vibration sources of warship based on transfer path analysis".